Human vision-based technology for real-time processing of wide-angle aerospace video signals

Limited radio transmission bandwidth severely restricts the use of wide-angle video. Current video compression techniques are substantially sub-optimal for large fields of view, so video cannot be transmitted fast enough. We aim to create a technology that gives drone pilots a human-like field of view through video glasses, in real time and beyond the line of sight.

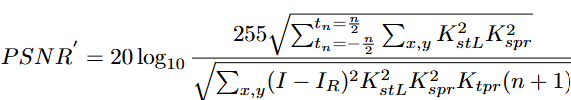

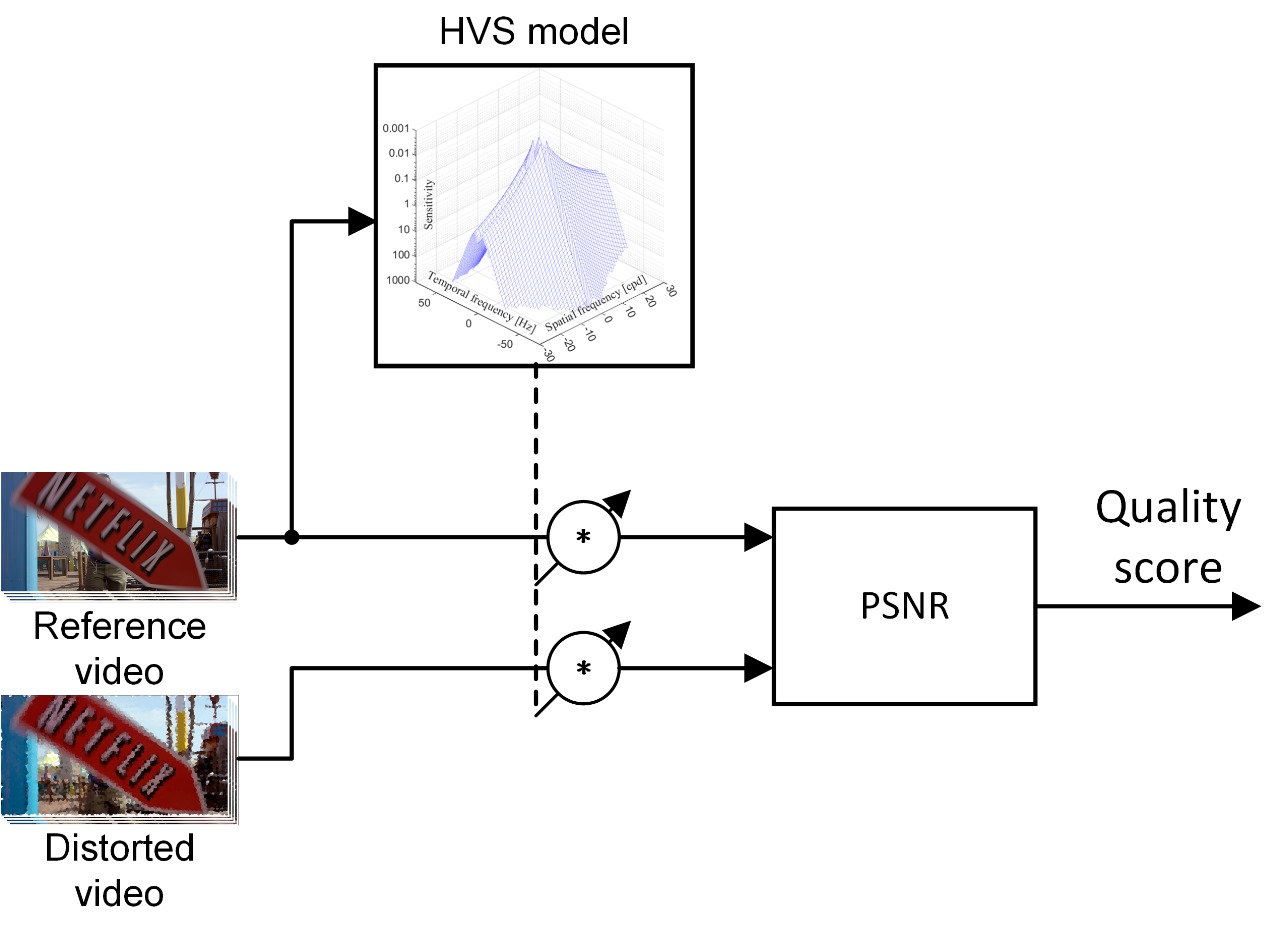

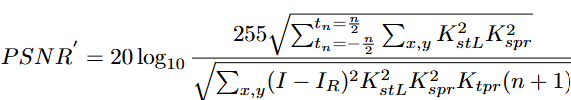

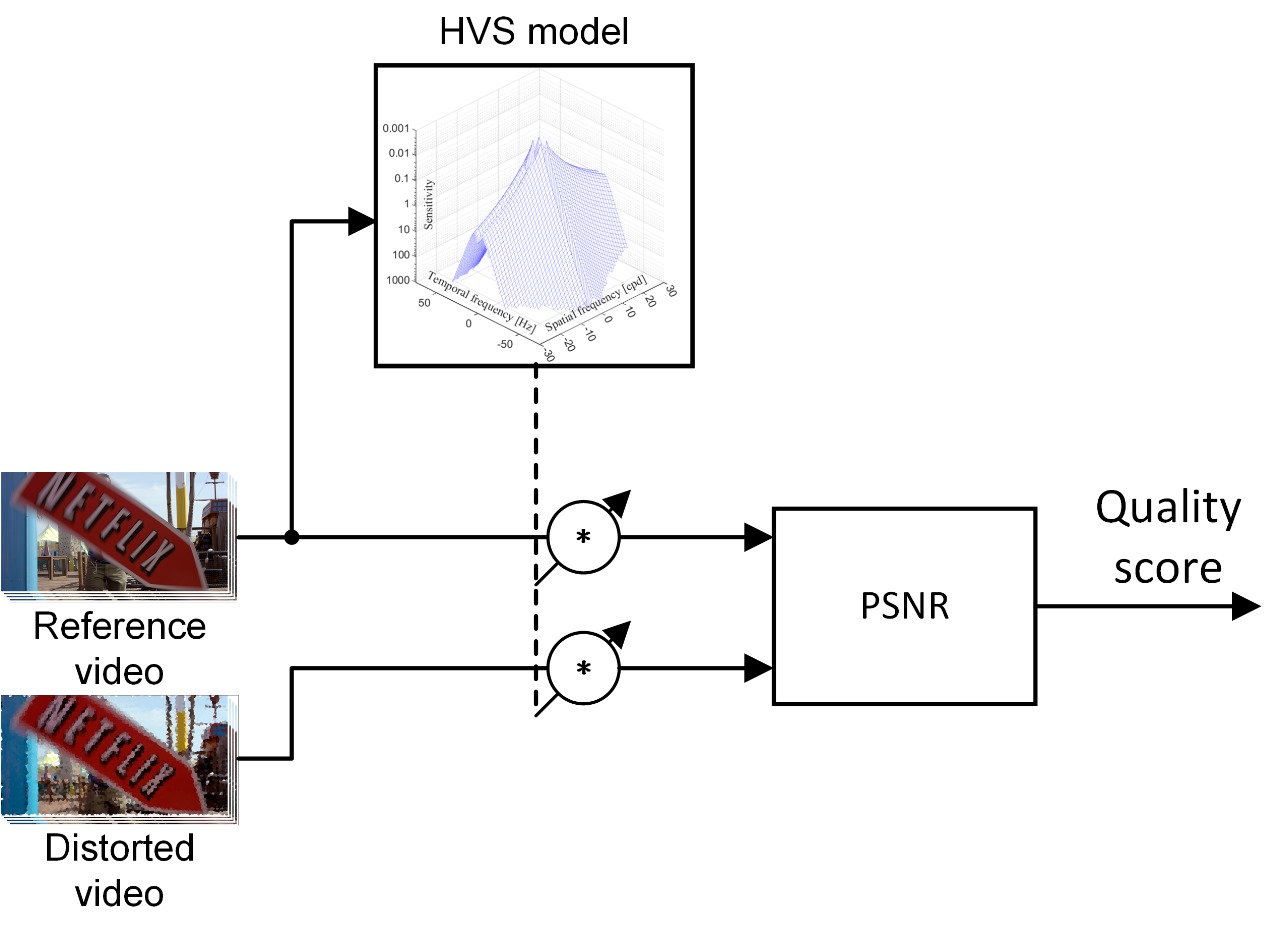

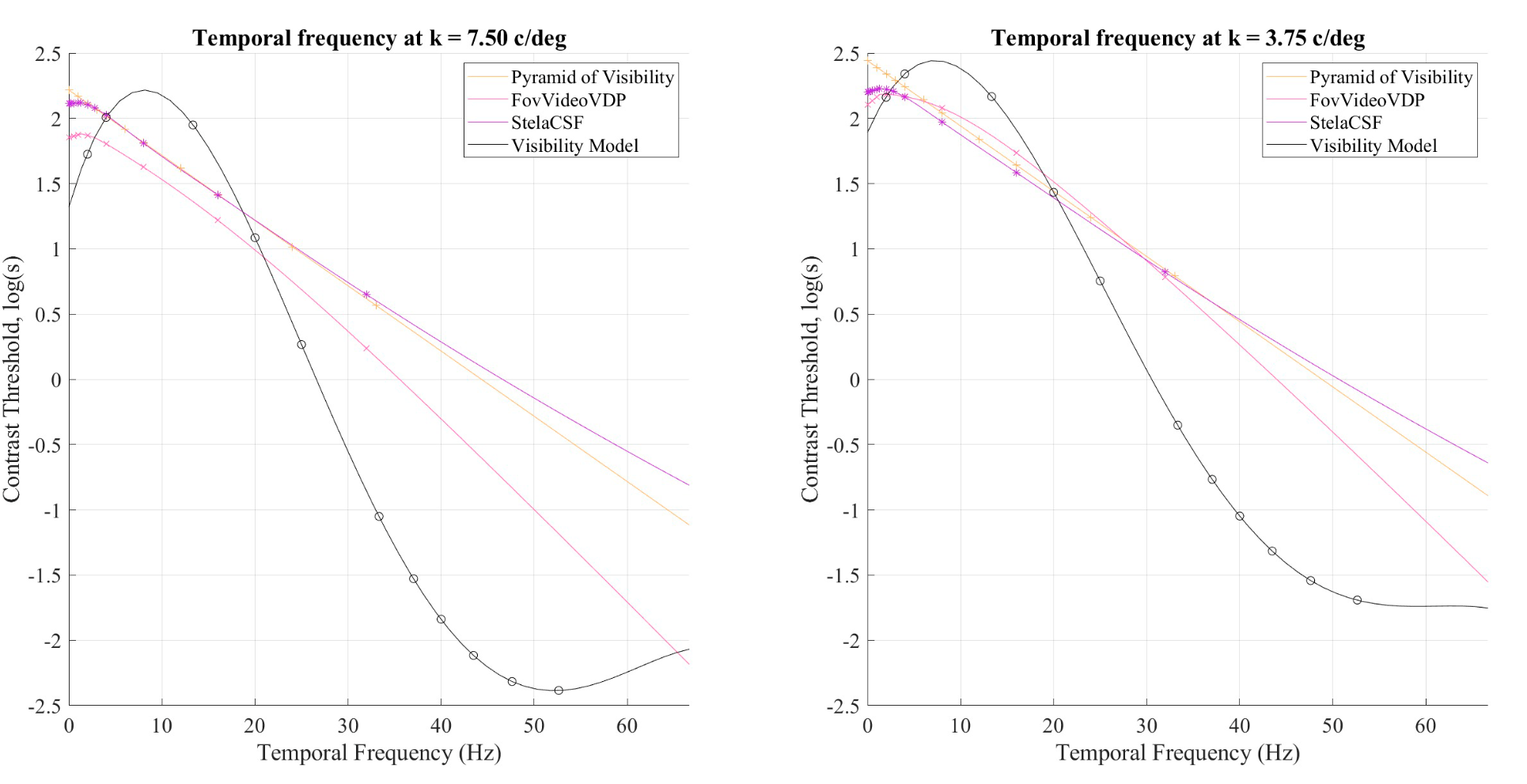

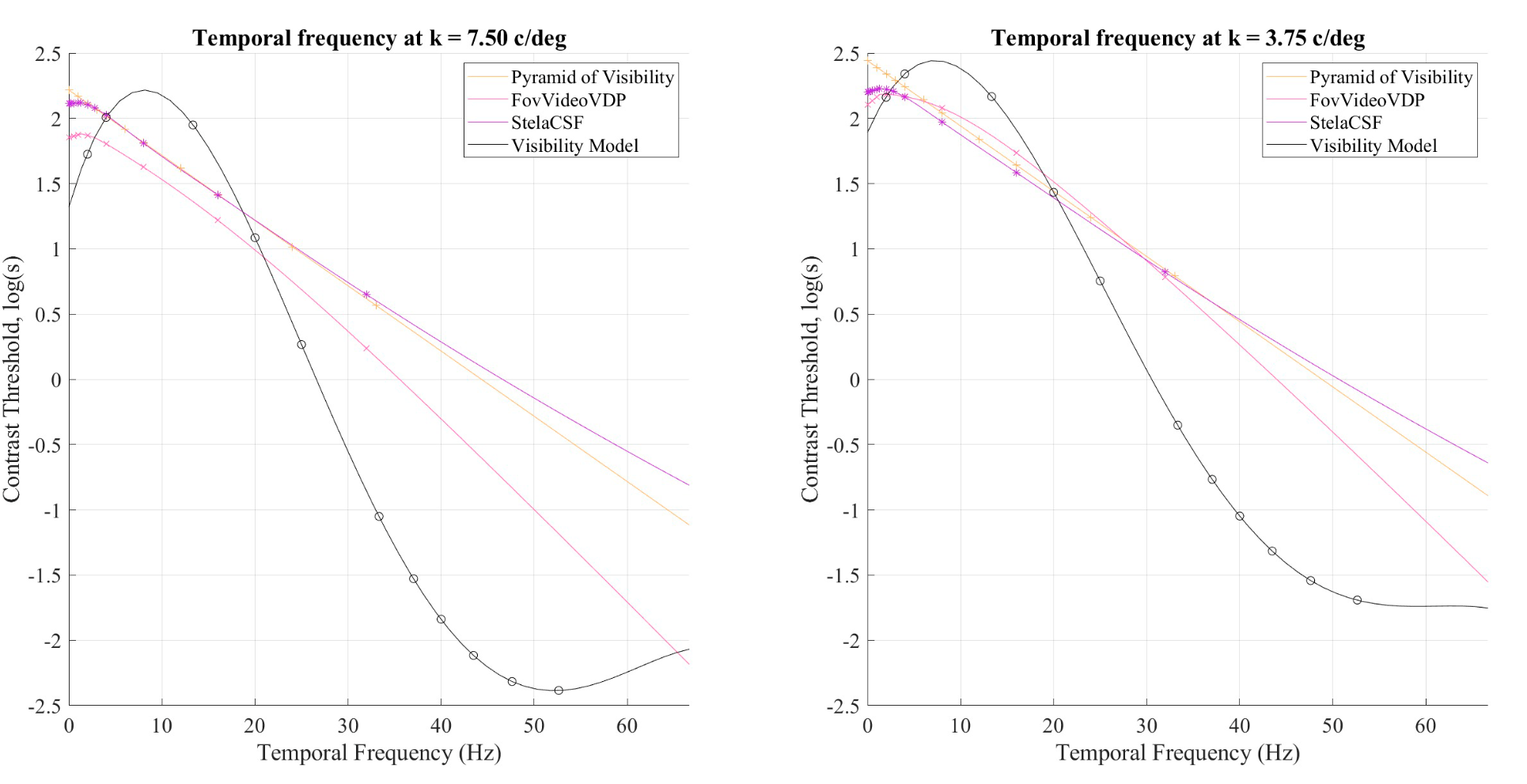

Video quality metric compatible with PSNR considering recent knowledge of human vision

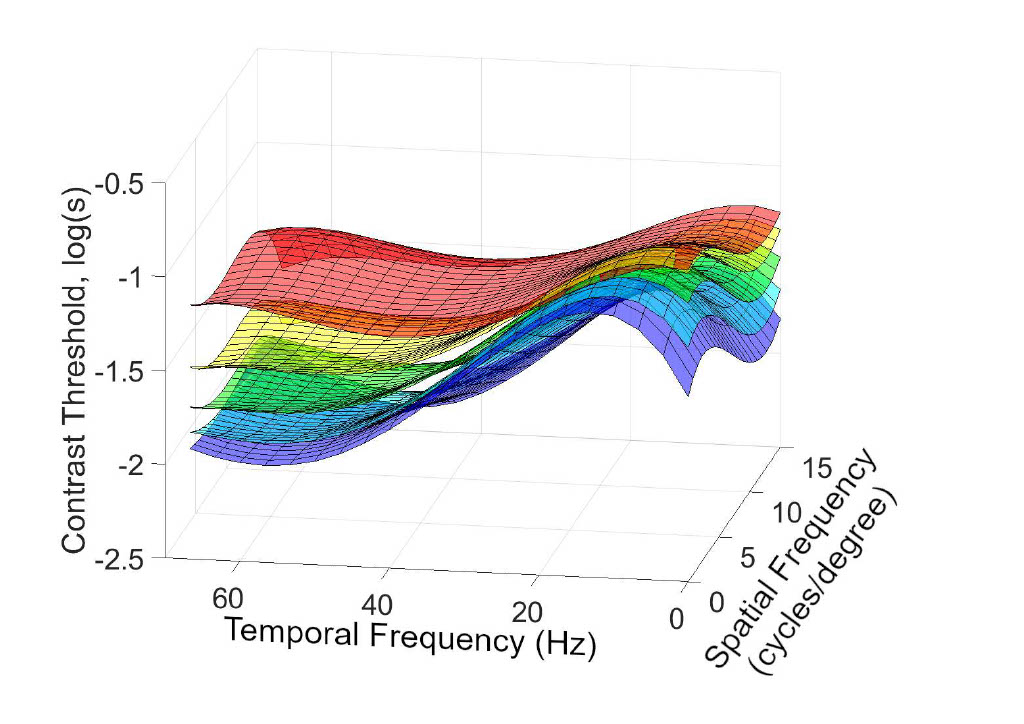

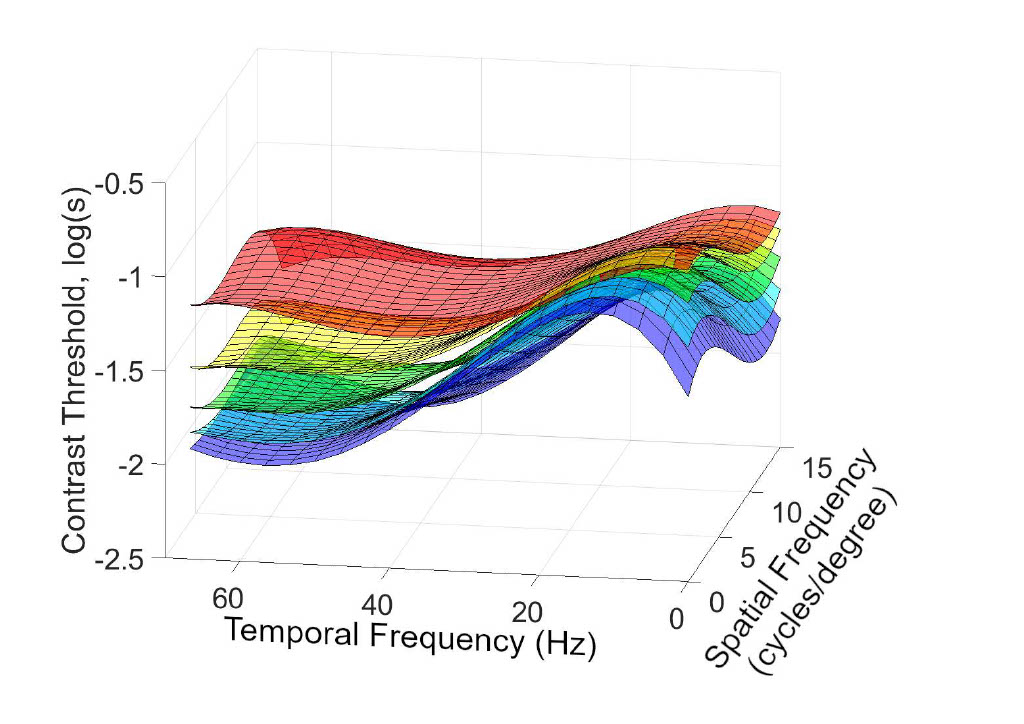

This work analyses existing data on human perception of temporal frequencies in central and peripheral vision. It presents a new method for measuring video quality, built on new models of human contrast sensitivity. These models include visibility thresholds for spatiotemporal sinusoidal variations of different spatial sizes and temporal modulation rates on modern displays.
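For context, the baseline such a metric stays compatible with is the standard peak signal-to-noise ratio, `PSNR = 10 * log10(MAX^2 / MSE)`. The sketch below computes plain PSNR and a weighted variant in which each pixel's squared error is scaled by a visibility weight. The weighting scheme, the function names, and the example weights are illustrative assumptions, not the method of the presented work.

```python
import math


def psnr(reference, distorted, max_value=255.0):
    """Standard PSNR in dB between two equally sized flat pixel sequences."""
    if len(reference) != len(distorted) or not reference:
        raise ValueError("inputs must be non-empty and of equal length")
    mse = sum((r - d) ** 2 for r, d in zip(reference, distorted)) / len(reference)
    if mse == 0:
        return math.inf  # identical signals: PSNR is unbounded
    return 10.0 * math.log10(max_value ** 2 / mse)


def weighted_psnr(reference, distorted, weights, max_value=255.0):
    """PSNR over a weighted mean squared error.

    `weights` is a hypothetical per-pixel visibility map: a larger weight
    means an error at that pixel is assumed to be more visible to a viewer.
    """
    if not (len(reference) == len(distorted) == len(weights)) or not reference:
        raise ValueError("inputs must be non-empty and of equal length")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    wmse = sum(w * (r - d) ** 2
               for r, d, w in zip(reference, distorted, weights)) / total
    if wmse == 0:
        return math.inf
    return 10.0 * math.log10(max_value ** 2 / wmse)


if __name__ == "__main__":
    ref = [100, 100, 100, 100]
    dist = [100, 110, 100, 100]
    print(psnr(ref, dist))
    # Down-weighting the distorted pixel raises the score (assumed weights).
    print(weighted_psnr(ref, dist, [1.0, 0.25, 1.0, 1.0]))
```

With uniform weights the weighted variant reduces to plain PSNR, which is one simple way for a perceptual metric to remain on the same dB scale.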

Next-Generation Video AI: Unlocking Efficiency with Psychovisual Perception Models

This project will create the core of visual knowledge needed to train visual artificial intelligence. By leveraging new insights into human visual perception, we will reduce redundancy in video training datasets, improving internal computations and predictive accuracy.

A contrast sensitivity model of the human visual system in modern conditions for presenting video content

In this work, 40,000 visibility thresholds for spatiotemporal sinusoidal variations, which are needed to determine the artefacts a human perceives, were measured with a new method across different spatial sizes and temporal modulation rates. Based on the large-scale data obtained in the experiment, we propose a multidimensional model of human contrast sensitivity under modern conditions of video content presentation.
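To make the terms concrete: a spatiotemporal sinusoidal variation is a luminance pattern that varies sinusoidally in both space and time, and contrast sensitivity is conventionally defined as the reciprocal of the contrast at the visibility threshold. The sketch below assumes a counterphase-flickering grating as the stimulus form. The exact stimuli used in the experiment are not specified here, so the function names, parameters, and values are hypothetical.

```python
import math


def grating_luminance(x_deg, t_sec, mean_luminance, contrast,
                      spatial_cpd, temporal_hz):
    """Luminance of a counterphase-flickering sinusoidal grating.

    x_deg: position in degrees of visual angle; t_sec: time in seconds.
    spatial_cpd: spatial frequency in cycles per degree.
    temporal_hz: temporal modulation rate in hertz.
    The stimulus form is an assumption made for illustration.
    """
    return mean_luminance * (
        1.0
        + contrast
        * math.cos(2.0 * math.pi * spatial_cpd * x_deg)
        * math.cos(2.0 * math.pi * temporal_hz * t_sec)
    )


def michelson_contrast(luminances):
    """Michelson contrast (Lmax - Lmin) / (Lmax + Lmin) of sampled luminances."""
    lo, hi = min(luminances), max(luminances)
    if hi + lo <= 0:
        raise ValueError("luminances must have a positive sum of extremes")
    return (hi - lo) / (hi + lo)


def contrast_sensitivity(threshold_contrast):
    """Sensitivity as the reciprocal of the threshold contrast."""
    if threshold_contrast <= 0:
        raise ValueError("threshold contrast must be positive")
    return 1.0 / threshold_contrast


if __name__ == "__main__":
    # Sample one spatial cycle of a 4 cycles/degree grating at t = 0.
    samples = [
        grating_luminance(i / 400.0, 0.0, 100.0, 0.2, 4.0, 8.0)
        for i in range(100)
    ]
    print(michelson_contrast(samples))
    print(contrast_sensitivity(0.01))
```

Each measured threshold then corresponds to one point of a sensitivity surface over spatial size and temporal modulation rate, which is the kind of data a multidimensional model would be fitted to.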

My students are afraid of AI. Lecturers can explain to students of all disciplines how to embrace new technologies.

EIT students don't understand AI. Many view AI as an omniscient machine that will produce knowledge and be connected to the internet in the future. EIT currently offers students courses on academic integrity and AI tools. However, these courses do not address students' anxiety about AI.

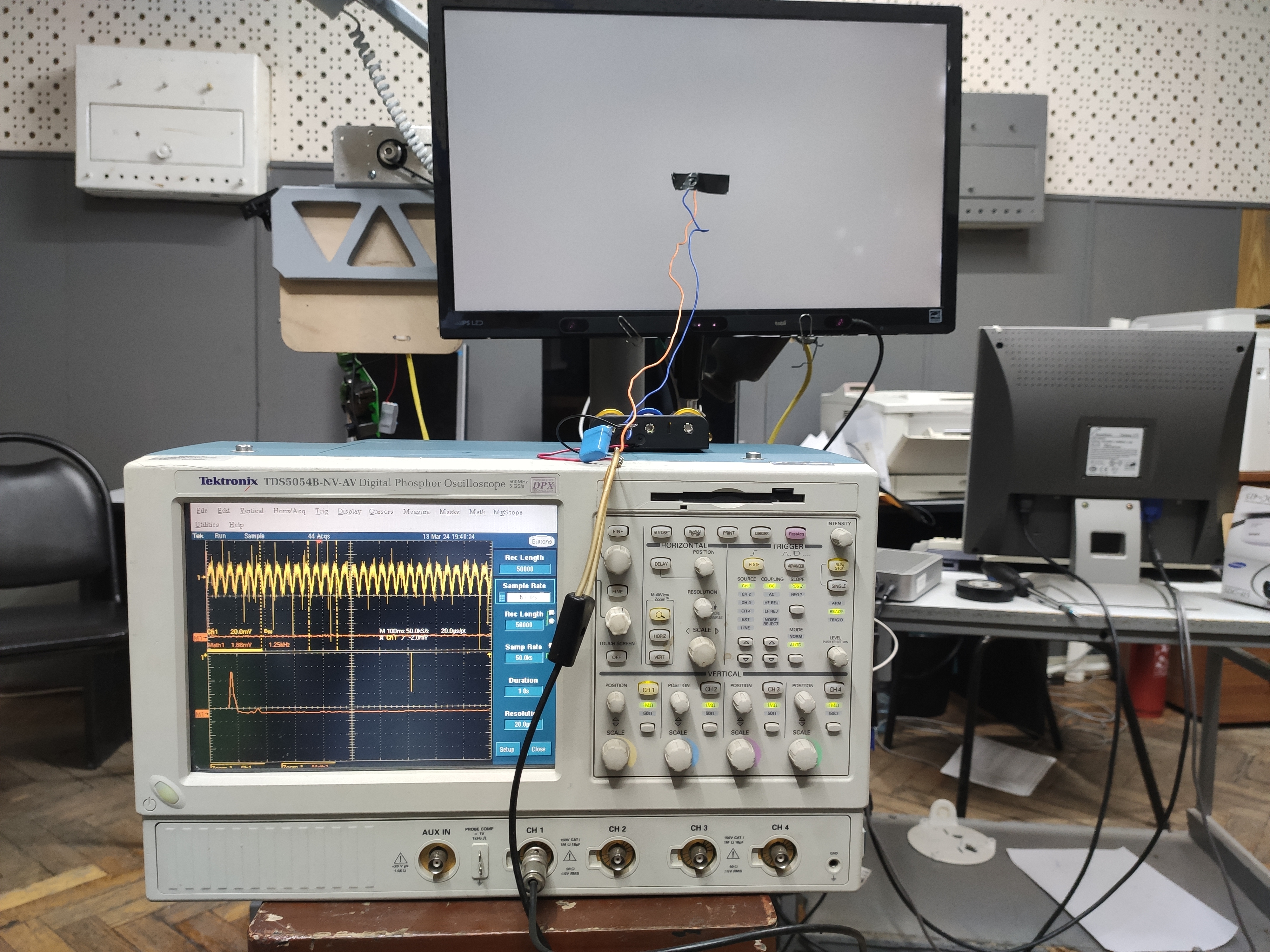

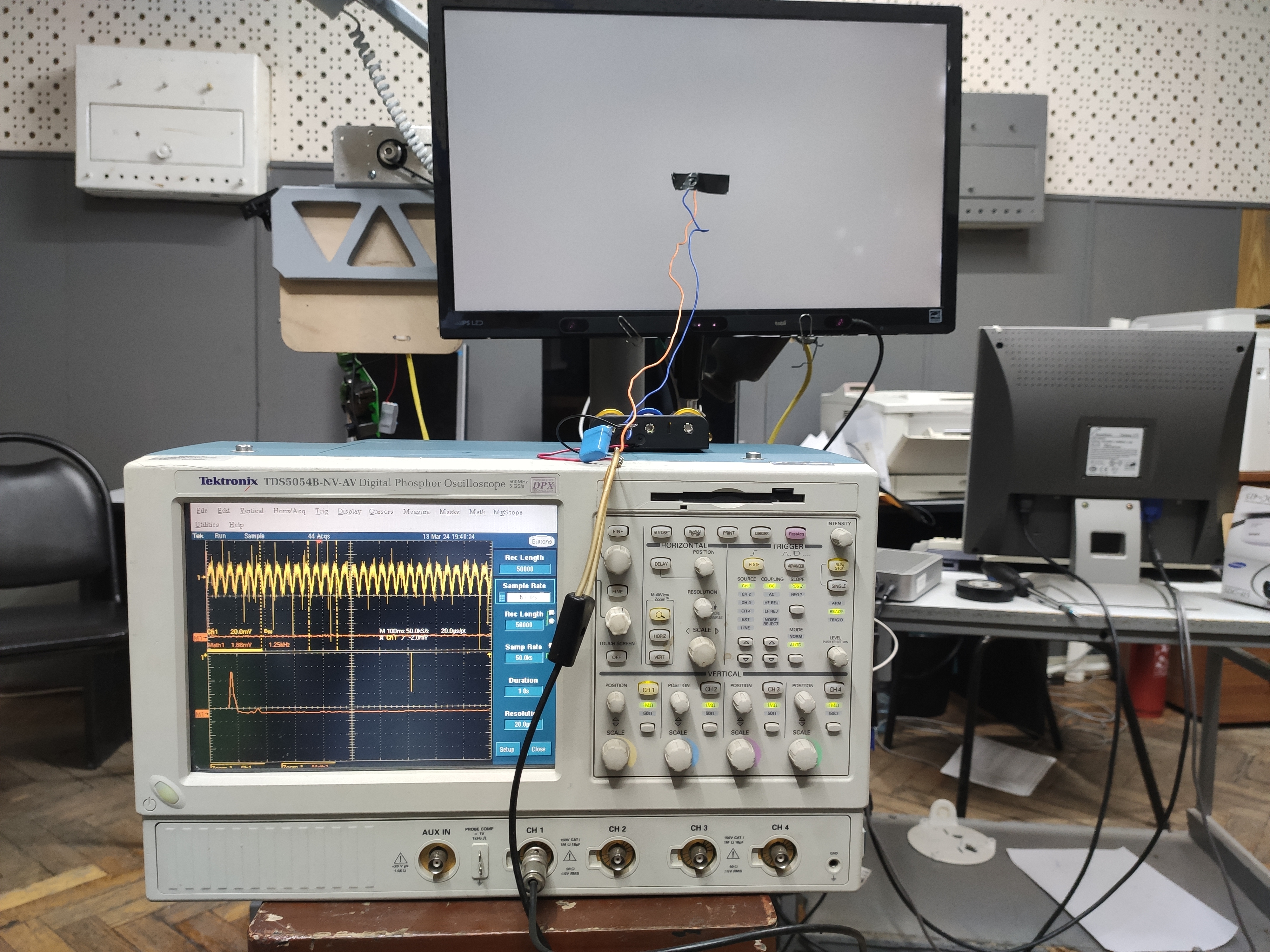

A Measurement Method and a Mathematical Model of the Human Vision System

Based on research into the limits of human vision, we offer a method, software, and test equipment for studying and measuring the characteristics of the human visual system. This toolset generates and evaluates stimuli with a specified combination of spatial, temporal, and color characteristics, which differ within a range narrow enough for measurement.

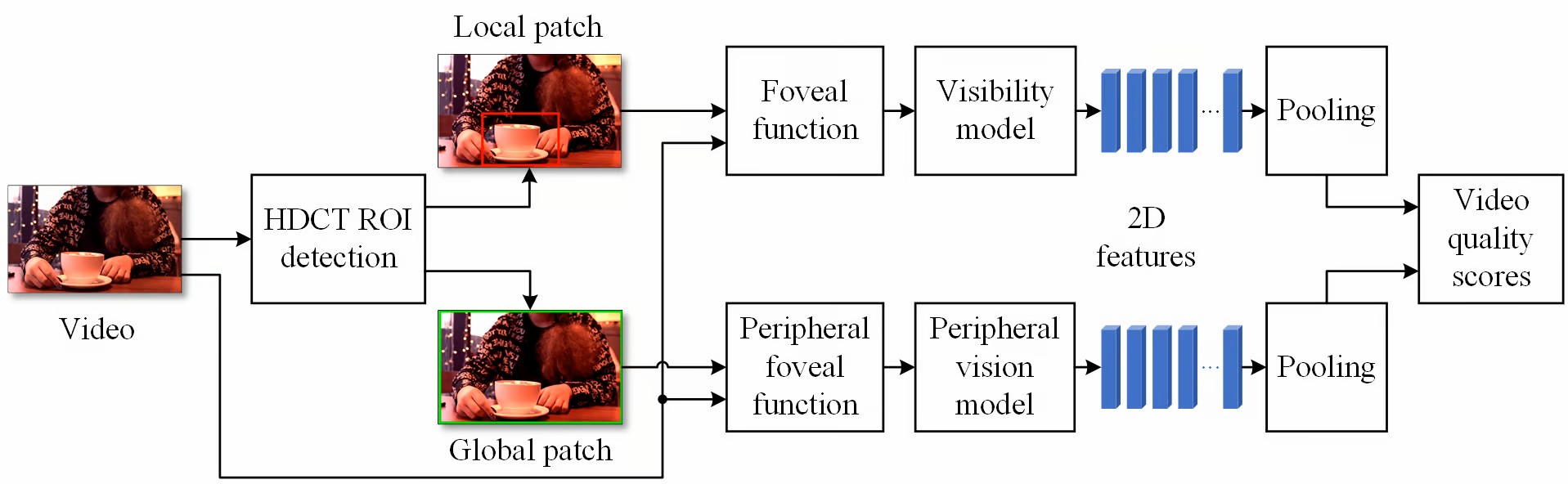

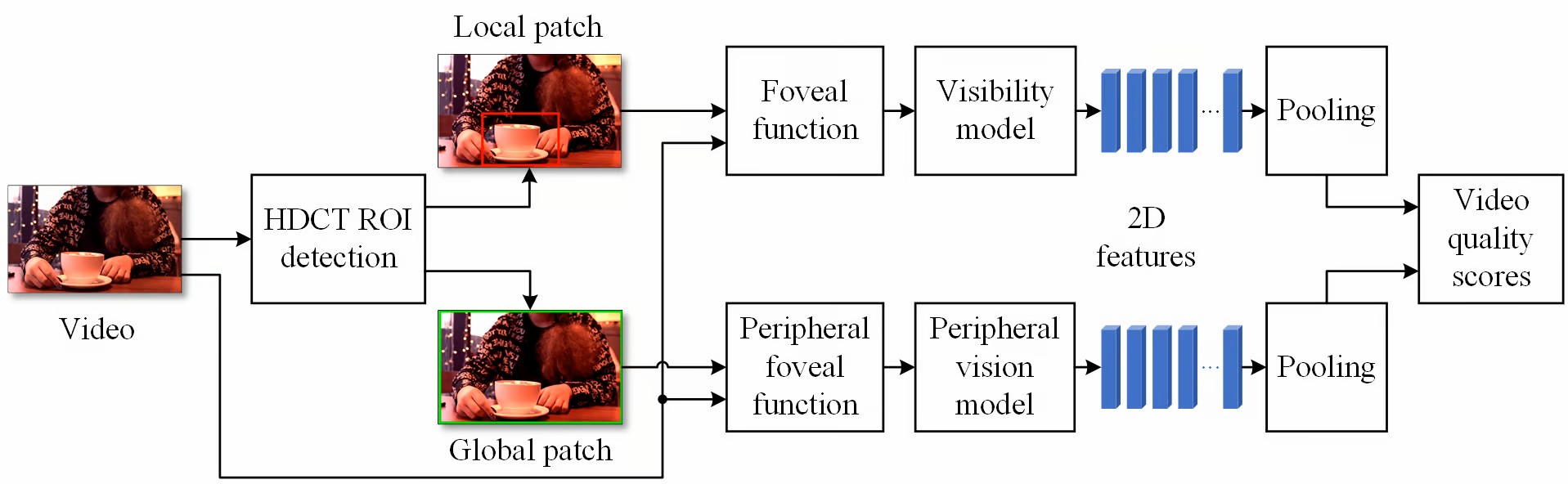

NRspttemVQA: real-time video quality assessment based on the user's visual perception

We created the first no-reference video quality metric that incorporates the psychophysical features of the user's video experience. The metric predicts the user's subjective rating of a video stably. Our experimental results show that it achieves the most stable performance on three independent video datasets.

Constant Subjective Quality Database: The Research and Device of Generating Video Sequences of Constant Quality

Here, we address streaming quality by creating a large-scale dataset of video compression and transmission artefacts. Our final dataset consists of 4.1 million perceptual video quality thresholds collected from users.