Abstract:

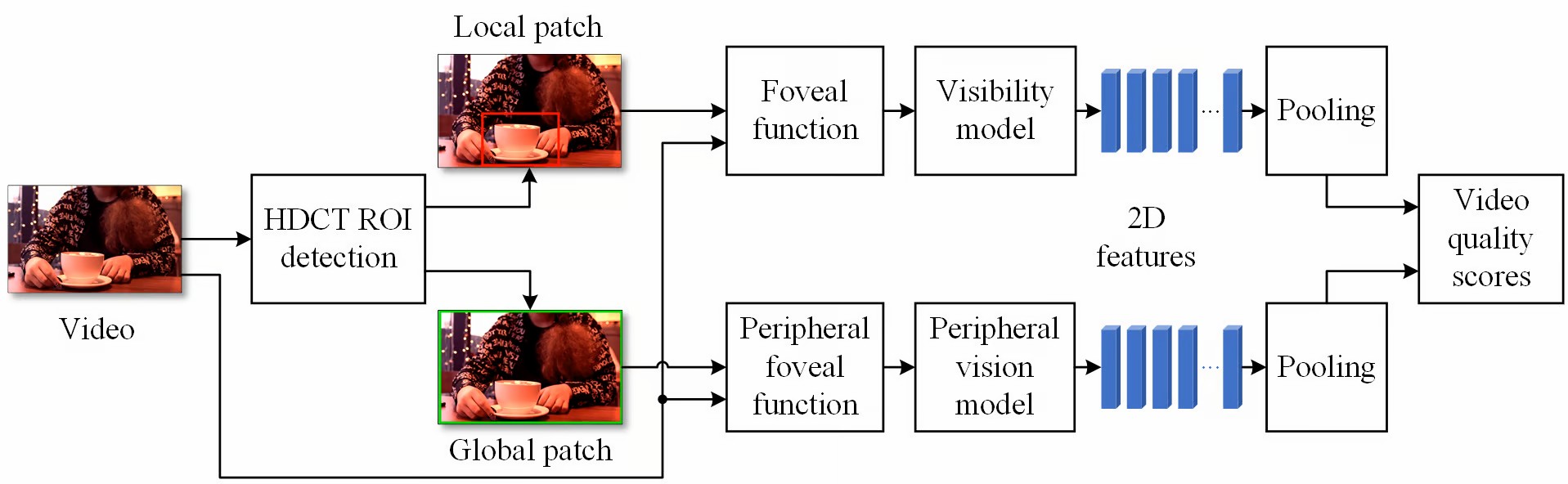

We propose a new non-reference video quality metric, the first to include the psychophysical features of the user's video experience. The metric is designed to predict the user's subjective rating of a video stably. In our experiments, it achieves the most stable performance of the metrics tested on three independent video datasets (CSQ, LIVE-NFLX and KoNViD-1k).

Materials:

Mozhaeva A, Mazin V, Cree MJ and Streeter L (2023). NRspttemVQA: Real-Time Video Quality Assessment Based on the User’s Visual Perception, 38th International Conference on Image and Vision Computing New Zealand (IVCNZ), Palmerston North, New Zealand, pp. 1-7. doi: 10.1109/IVCNZ61134.2023.10343863.

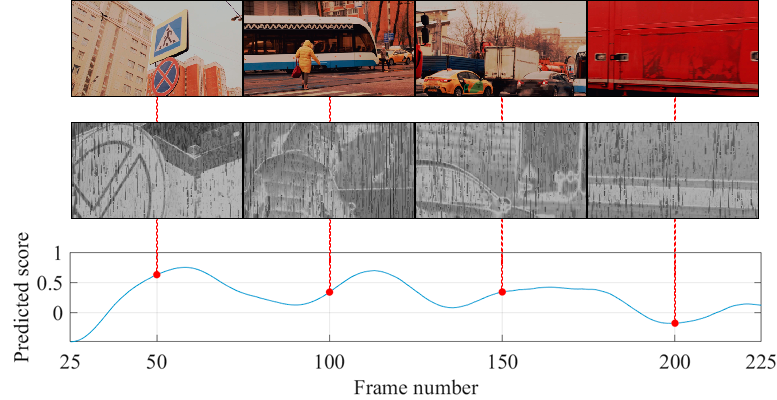

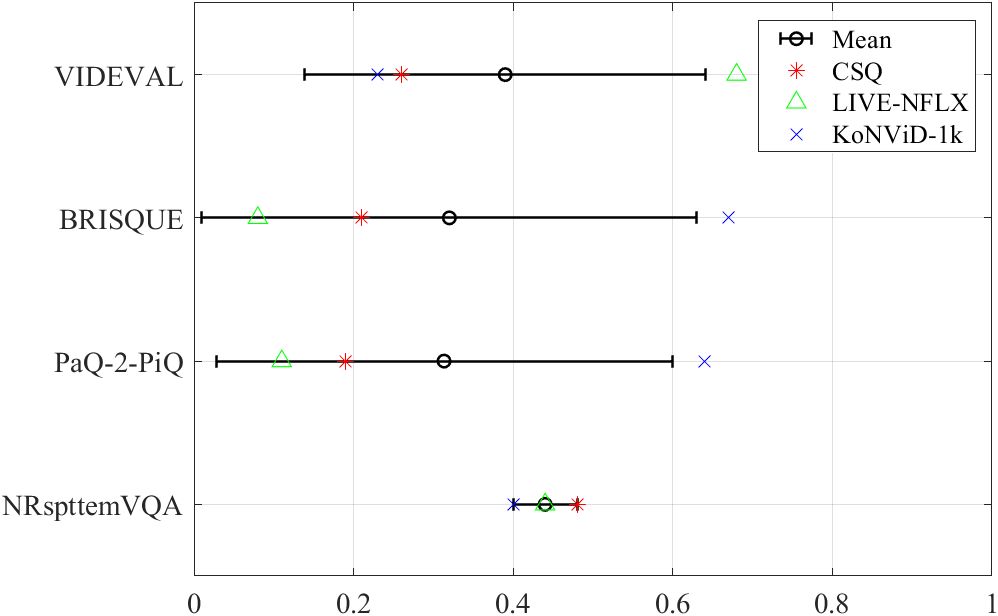

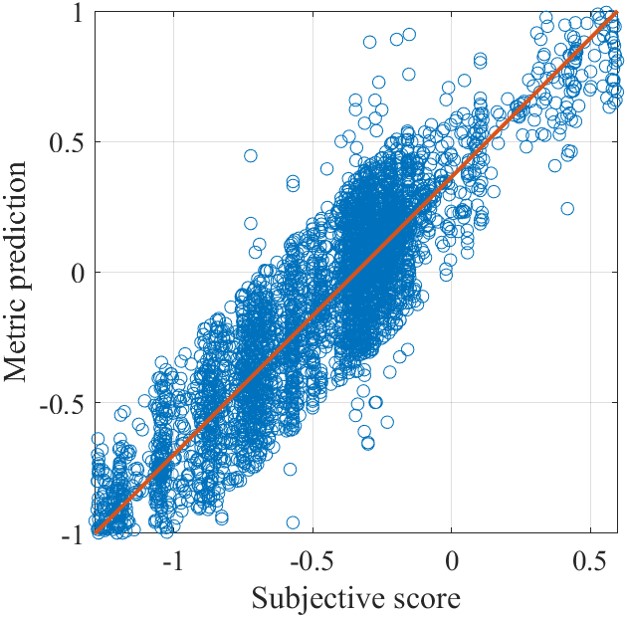

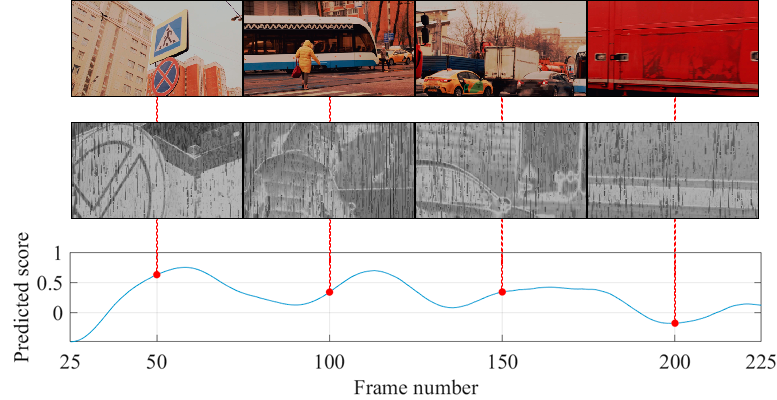

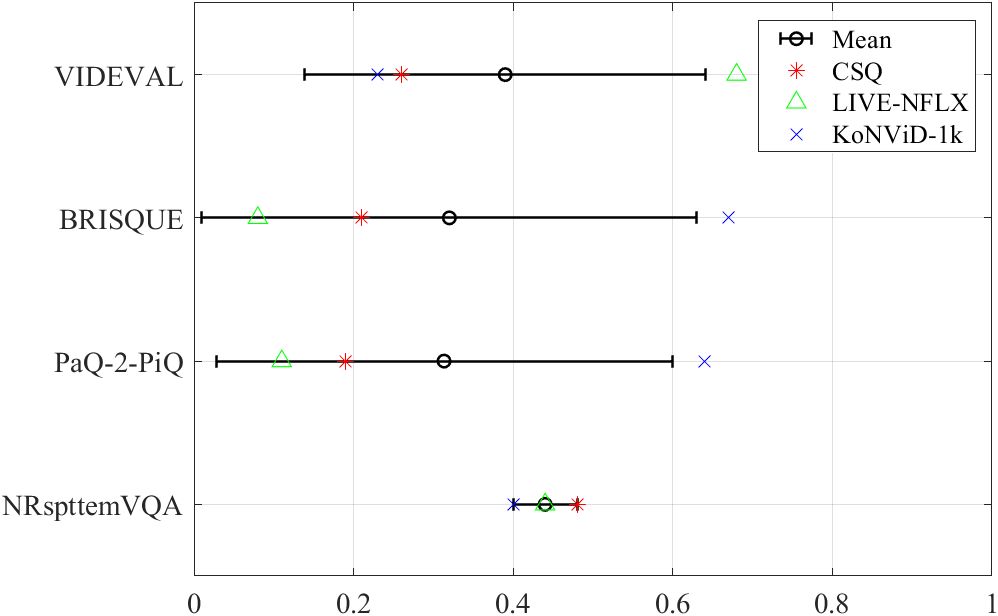

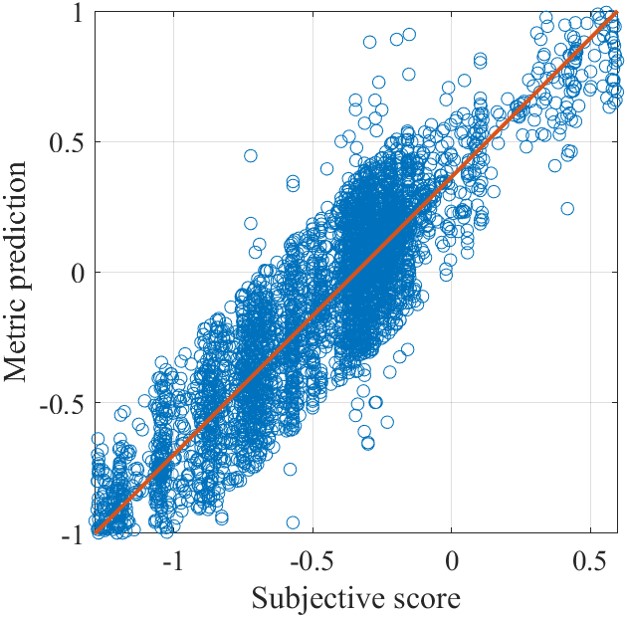

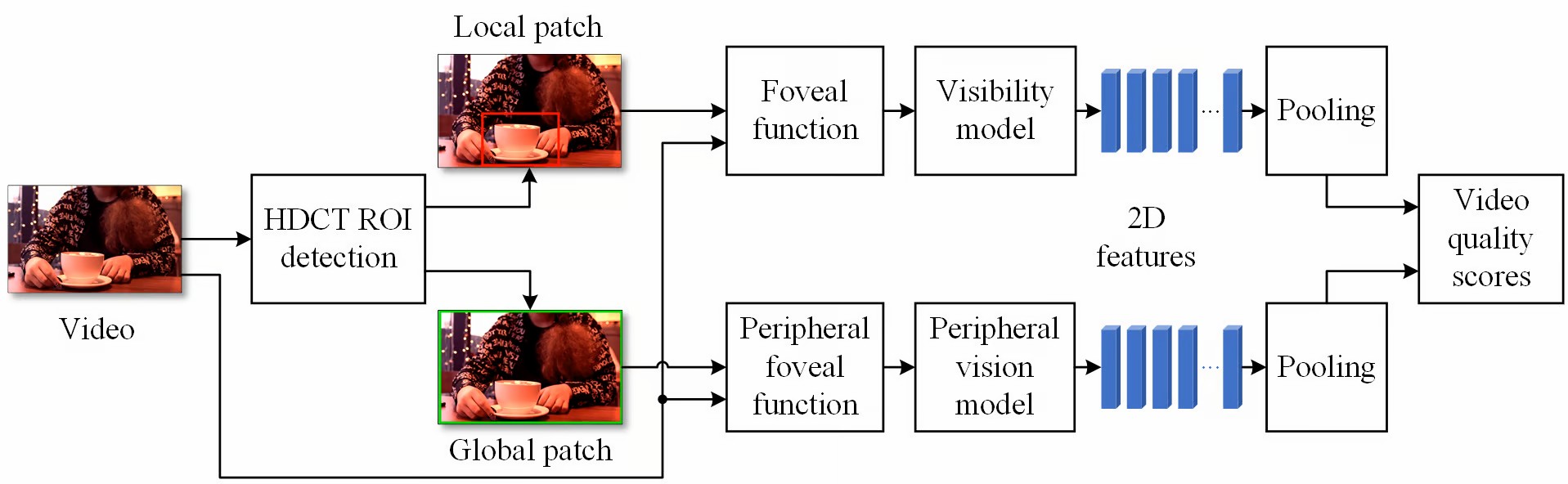

Example of spatio-temporal quality maps for the frame of the video sequence:

Correlation interval of non-reference video quality metrics on video sequences CSQ, LIVE-NFLX, KoNViD-1k. The new proposed metric has the most consistent high correlation of the metrics tested herein:

Visualisation of NRspttemVQA results on LIVE-NFLX database:

NRspttemVQA: real-time video quality assessment based on the user's visual perception


Dr Anastasia Mozhaeva, Eastern Institute of Technology

Dr Lee Streeter, The University of Waikato

Associate Professor Michael Cree, The University of Waikato

Vladimir Mazin, Moscow Technical University of Communications and Informatics

Primary contact: Anastasia Mozhaeva amozhaeva@eit.ac.nz

Code and data [Github]
